More than a decade ago, in another life, I wrote an article for the South African version of US ‘hipster’ business magazine, Fast Company.

This was obviously before ChatGPT, but the fear of an out-of-control AI was already being expressed by many tech luminaries; a fear almost matched by a measure of ‘utopianism’ concerning machine-enabled human superpowers and immortality.

But contra the sentiments expressed by Musk et al, even in a hipster publication, I managed to express an opinion that because consciousness is immaterial, some kind of humanoid computer can never be built. This would be true even if an ‘organic’ machine mimicking a brain were to be possible. And obviously a mechanical, metal machine could not even get close. I referred to atheist philosopher of mind Thomas Nagel in this regard, who had famously abandoned materialist Darwinism as he concluded the mind was not reducible to the brain and was thus not a product of blind and random mutations.

Much of this illusion of AI comes to us from the fallacious ‘mind body’ problem, begun by Descartes. But the truth is there is simply no reason to imagine your body as a kind of machine with a ghost pilot lodged within. ‘We’ do not live in a fleshly cabinet. We are beings in the world and our souls are, as per Aristotle, the forms of our bodies. This is why Christianity has always taught the necessary resurrection of the body if we are to live eternally.

Additionally, we know now that our guts are a kind of second brain, and that nerves will register electrical activity before the brain apparently sends a signal.

This all means that to build a human mind, you first need to build a human being. Therefore you cannot build a mind, because you cannot build a human being.

As I point out below, we do already use a kind of non-conscious AI in many ways. The question is whether we can take a quantum leap to artificial super intelligence that is conscious.

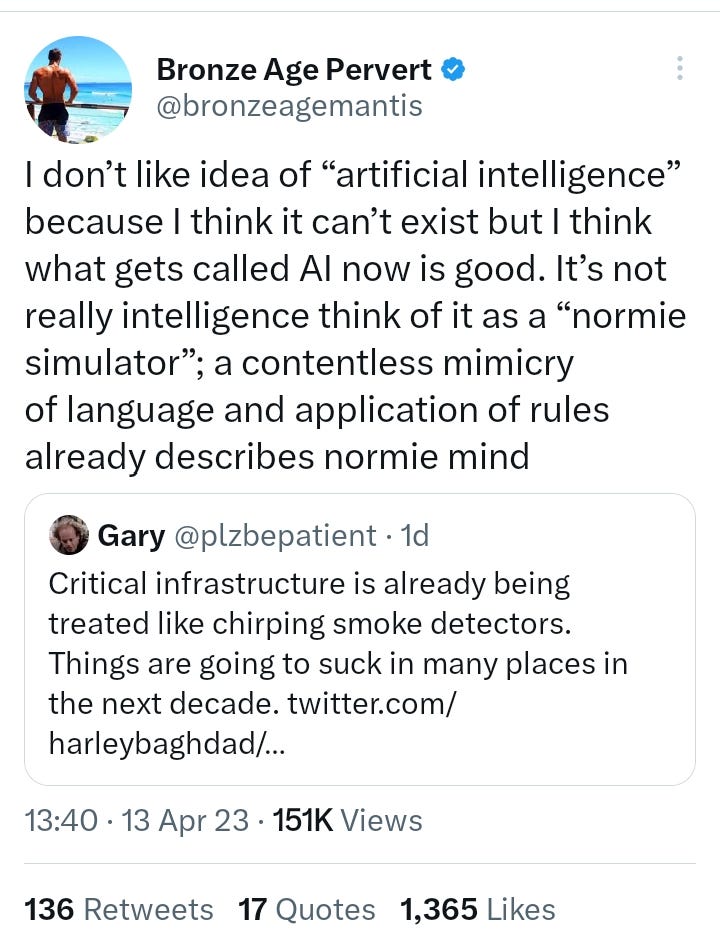

I say no. Instead, for better or worse, we will get this:

Better AI will hopefully end mind-numbing fake jobs, and ‘search engine’ style AI will concomitantly expose the fraudulence and banality of so much written content and modern thought, as it fakes ‘normie’ opinion so easily and seamlessly.

The peril of AI is something different from the supposed machine dominance of a super robot. It is rather the peril of big data machines being used as tools to ‘nudge’ us towards lockdown-style destruction and dystopia.

For a 2015 description of these concepts as they were forming in my mind, and as filtered through hipster business magazine style, read the condensed version of the aforementioned article below…

In the past few months, Elon Musk, Bill Gates, and Stephen Hawking have all issued warnings to the scientific community regarding the potential threat of developing artificial intelligence (AI).

The warning stems from a belief in the Law of Accelerating Returns – that technological developments will increase exponentially as they feed off each other.

In the case of AI, the artificial narrow intelligence in existence today (think smartphones, chess-playing computers and so forth), could, according to the likes of Musk et al, quickly develop into artificial general intelligence (a human-like intelligence not yet in existence – think of the computer HAL in 2001: A Space Odyssey).

This is where the problem arises.

If an artificial general intelligence (AGI) is created, such an intelligence could easily deploy itself in service of creating an artificial super intelligence (ASI), which, unencumbered by biology, and operating with an intelligence far beyond human capabilities, could decide to refuse to be switched off and then embark on a perfectly rational mission to kill humanity in pursuit of a programmed goal, such as ending spam email, or filling the world with paper clips.

And so Musk is warning of 'our biggest existential threat as a species', while Hawking says 'the development of full artificial intelligence could spell the end of the human race.' Musk is also putting his money where his mouth is by donating $10 million to Vicarious and DeepMind Technologies (owned by Google), two firms committed to developing AI in an ethical and responsible fashion.

Director of Engineering at Google, Ray Kurzweil, is a firm believer in the imminent emergence of ASI, yet he is largely sanguine about this eventuality.

Kurzweil suggests that we are reaching a new epoch in history, which he calls the singularity, in which humans and machines will merge, immortality will be achieved, and a new non-biological cosmos will begin as humans upload themselves onto new hyper-intelligent machines.

For Kurzweil this eventuality is merely a matter of time.

However one perceives AI and ASI – as a burgeoning golden age or as a potential extinction event – the future spelt out by our modern day prophets is nothing short of astonishing. Could it possibly be true that humans could create minds vastly more intelligent than ourselves? Can a mind be non-biological?

It is indisputable that at this present moment we have machines that can out-perform humans in a multitude of tasks. In fact, that has been true since at least the Industrial Revolution.

We currently have computers that can out-perform us in playing chess, answering general knowledge questions, and translating languages, among other things.

These abilities have by and large provided seemingly endless opportunities for innovation in business and service delivery to consumers.

Indeed it is incredible how quickly modern society has unequivocally embraced artificial intelligence. The vast majority of people are entirely comfortable with on-board computers on aeroplanes, motor vehicles, and mobile phones – computers which all undoubtedly employ artificial intelligence.

Yet another advance in this long tradition of rapid development is currently under way in South Africa, where Metropolitan Health has deployed IBM's Watson in their customer service division.

Watson is a supercomputer capable of answering questions in natural language. It rose to fame in 2011 when it competed in the US quiz show Jeopardy!, beating out two former winners of the show to win a $1 million prize. Watson works by sorting through large amounts of data in order to produce answers to questions in a human fashion.

Metropolitan's 'adoption' of Watson vividly demonstrates the great potential of AI in dynamically opening up new avenues of service and product development.

Watson has already been deployed commercially in health care when, in 2013, IBM partnered with US Memorial Sloan Kettering Cancer Centre to help doctors treat cancer patients. The computer was able to search vast volumes of published cancer literature and case histories in order to make informed suggestions about possible treatments.

In this regard, it is completely understandable why researchers are moving full steam ahead to unlock all the benefits of this next step in computing.

So why then the doom and gloom offered by the likes of Musk, Gates, and Hawking?

The issue is the belief that the leap from informational machine to a kind of consciousness wherein real decision making and conceptualisation abilities are conferred on a non-biological machine is both possible and imminent. (In Watson's case, it is clear the computer merely sifts data – it has no real independent thinking ability of its own.)

If this is the case, a situation is created in which the Law of Accelerating Returns is married with the Law of Unintended Consequences on the grandest possible scale.

But there are many dissenters from the viewpoints of both prophets of doom such as Musk and optimistic futurists like Kurzweil.

Many maintain that the possibility of achieving ASI is simply non-existent.

For some thinkers, this scepticism is predicated on their view of the complexity of the brain, and the so-called 'anti-progress' of neuroscience as it discovers more and more how little is known concerning the relationships between synapses and neurons and subjective thought.

Early computer theorists like Alan Turing posited that the brain is nothing but a highly complex machine, the software of which simply awaits a more advanced science in order to penetrate its mysteries. Yet current research suggests that if Turing was right, then the brain is a machine, a piece of matter, unlike any other. So much so, that modelling it non-biologically may be impossible.

However, University of Cape Town computer researcher, Dr Geoff Nitschke, believes that a re-working of current computer architecture, from silicon circuit boards and electric transistors, to an explicitly brain-mimicking neuromorphic architecture, may theoretically allow computers to eventually become deep-learning, artificial brains.

'This means that the computer will be made up of artificial neurons and neural tissue engineered from replicated organic materials that make up biological central nervous systems. In such a neuromorphic computer the electrical impulses firing on organic connections between the artificial neurons would be the software, and the physical network of neurons would be the hardware.

'Replicating a biological brain perfectly with a neuromorphic computer could conceivably give rise to the intangible “something more” of our minds, or self-awareness that we associate with consciousness.'

Nitschke is quick to point out that this would by no means be a foregone conclusion even if the right bio-technology were developed.

'However, if the computer remains a disembodied entity, like a brain in a jar, then consciousness seems unlikely since thousands of years of evolution of brains together with our bodies seems to have played a key role in developing the capacity to problem solve, reason, speak, experience a lifetime of events and perhaps to even be self-aware.'

Nitschke therefore pours cold water on the idea that ASI is inevitable.

'The idea that ASI machines are an inevitable result of current technological progress in AI research is a fallacy. Examples of advances in AI are frequently cited in the media, but these typically do not address the ultimate goals of AI, to produce machines with intelligence comparable to our own and potentially far beyond.'

Another problem with the narrative of an ASI on the horizon is the contention that surrounds what consciousness actually is.

Nitschke notes that the broad disagreement surrounding the essence of consciousness makes it very difficult to envisage how it could be created.

Indeed, philosophers of a classical bent continue to insist that a human-like artificial intelligence is categorically impossible because consciousness is in fact immaterial.

The most famous of this set is the New York University professor, Thomas Nagel, who recently endured some blistering critique for suggesting in his 2012 book, Mind and Cosmos, that consciousness cannot be understood by normal Darwinian evolutionary categories.

To use an example, the process of seeing is easily understood scientifically (from photons to neurons) right up to the point where a subject sees. But who is conducting the seeing? Where is the sight taking place? Neuroscientists are beginning to suggest that there is no foreseeable, materialistic answer to such a question.

Some scientists have begun to assert that the brain is not synonymous with the mind – but rather a kind of transmitter for some kind of deeply enigmatic non-material substance – something analogous to an electromagnetic wave.

If this is the case, ASI may be nothing to fear at all – simply because it is impossible on a fundamental level.

Yet even if ASI is not on the horizon, that does not alter the clear and present power and dynamism of the AI currently on hand or soon to be within reach.

For example, Nitschke points to the incredible power AI has to make the enormous amounts of raw data available in our information age usable and valuable.

This is a power not to be taken lightly. This power can be harnessed for the common good, but it could equally be utilised for darker motives – health information, or deep surveillance.

In this sense, figures like Musk and Kurzweil may both be right in an unintended fashion. Our technological future may be filled with dazzling hope or apocalyptic doom, or, more likely, a combination of both.

In short, the threats and opportunities offered by the power of AI may be parallel to the threats and opportunities we have always faced in the form of our own human, non-artificial intelligence.

Nancy Pearcey and Alisa Childers had a really interesting conversation about the body-mind disconnect last week and how it leads to so many of the sad and disturbing things we are seeing in our culture: hook-up culture, abortion, homosexuality, transgenderism, euthanasia etc. All of them rely on disconnecting our bodies and minds to a degree.

And one of the interesting comments was specifically related to transgenderism, and that the end-goal is transhumanism. I think AI will have a role to play here. Your thoughts?

As to AI now... It is merely a tool (albeit a very powerful one) and how it is used depends on those developing and using it. We, the users, are fallen by nature, and while I don't doubt that it can be used for good, it will inevitably also be used for evil. And the scope of that evil is quite vast from where I stand.

What about artificial love?

Or an artificial Child?

Or an artificial pet?

Fake is Fake, dumb is dumb

Snake-Oil, is Snake-Oil

Today's ChatGPT LLM technology is not much better than Eliza on UNIX in the 1970s: just a parrot, but now the lookup table is Facebook and Twitter posts. Oh my god, all the knowledge of mankind; everything Kardashian has ever done at your fingertips. The power; the insanity

https://bilbobitch.substack.com/p/chat-gpt-meets-hal-from-2001-im-sorry